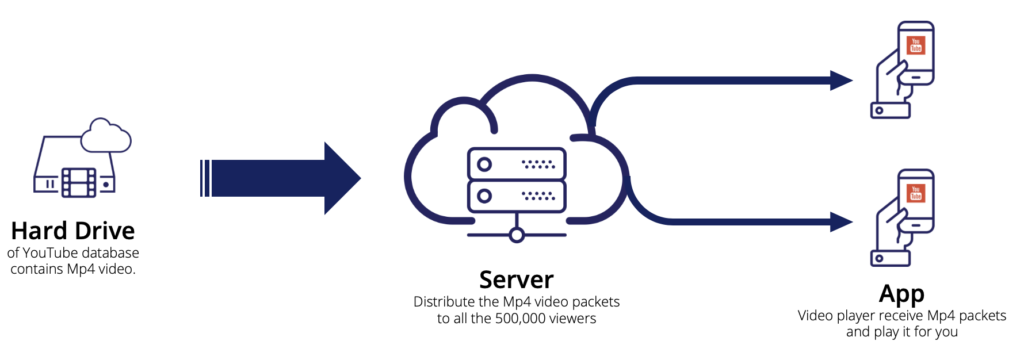

Your favourite star’s movie trailer has just been released on YouTube, and five hundred thousand fans, including you, are watching it simultaneously.

How does the system work?

When you open the YouTube app and tap the play icon, you are subscribing to that particular video for the session.

The server then streams the data packets to every subscriber, in order. With 500K users, the server has to stream the same video to all of them, each at a different point in time (of course, not everyone starts watching at the same moment).

The YouTube application topology has three main components:

- The video file, saved on a hard disk

- The YouTube app, a video player that plays the video

- The server, the tricky part, where all the distribution happens

We know that YouTube is not a small organization. It invests heavily in developing and maintaining its servers to ensure seamless video streaming for any number of users.

Finally, what is the job of the YouTube video streaming server? It gets the data from one place in one format and disseminates it to many (very many) subscribers in the same format.
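To make the "one source, many subscribers" idea concrete, here is a minimal sketch in Python. It is not how YouTube actually works; `VideoServer`, `subscribe` and `next_chunk` are made-up names for illustration. The point it shows is that the server keeps one copy of the video and only remembers, per viewer, which chunk comes next.

```python
class VideoServer:
    """Toy fan-out server: one stored video, many independent viewers."""

    def __init__(self, chunks):
        self.chunks = list(chunks)   # the single stored copy of the video
        self.positions = {}          # viewer id -> index of the next chunk

    def subscribe(self, viewer_id):
        # Pressing "play" starts this viewer at the beginning.
        self.positions[viewer_id] = 0

    def next_chunk(self, viewer_id):
        # Return the next chunk in order, or None when the video is over.
        pos = self.positions[viewer_id]
        if pos >= len(self.chunks):
            return None
        self.positions[viewer_id] = pos + 1
        return self.chunks[pos]


server = VideoServer(["chunk-1", "chunk-2", "chunk-3"])
server.subscribe("you")
first = server.next_chunk("you")          # you start watching
server.subscribe("late_fan")              # another fan joins later
second = server.next_chunk("you")         # you move on to the next chunk
late_first = server.next_chunk("late_fan")  # the late fan starts from the top
```

Both viewers receive the same chunks in the same order, just at different times.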

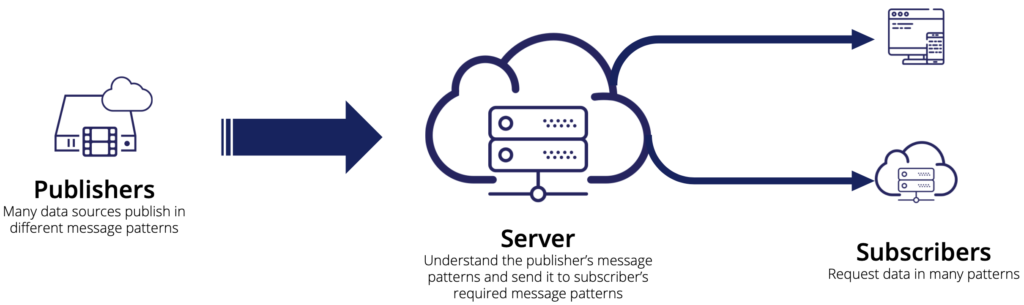

Let’s turn to data streaming.

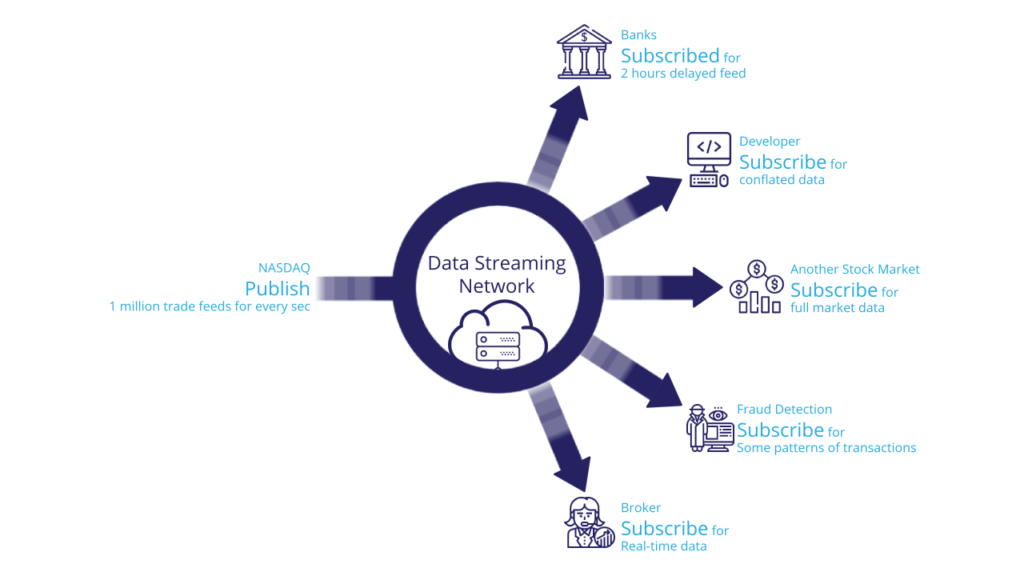

I’m very familiar with the stock market business, and data streaming is one of its pillars. Let me explain the scenario.

Data streaming in the stock market business

When a single share of Tesla (TSLA) is sold, the trade can change prices and statistics across the market. Tesla stock is listed on NASDAQ (a US stock exchange), so NASDAQ publishes these details from its system to the rest of the world using its own protocol.

It publishes the details as a data feed: the current value of Tesla, the change for everyone who has invested in Tesla, the impact on Apple stock, Google stock, and so on. A single trade can add thousands of pieces of information to this feed.

Many trades happen every second, and NASDAQ publishes all of this information as data feeds. That is typically about 1 million pieces of information every second.

I’m trading in London, and I’m only interested in Tesla data. A bank in Switzerland needs accumulated Tesla and Google data for the last 2 hours. The Singapore Stock Exchange wants all the data as a real-time feed. A stockbroker in New York is looking for all the Tesla and Apple data together.

So every subscriber has its own interests, but NASDAQ can’t cater to each of them. NASDAQ simply publishes all the information via its own protocol.

So, let’s put a smile on the face of every interested party. Build a data streaming network that works for everybody’s interests: NASDAQ publishes the data to the data streaming network, and any authorised party can subscribe and get just the details it is interested in.
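Here is a rough sketch of that idea in Python: one publisher, and authorised parties who each subscribe only to the symbols they care about. Everything in it (`StreamingNetwork`, the `"*"` wildcard, the party names and the prices) is made up for illustration and has nothing to do with NASDAQ's real protocol.

```python
from collections import defaultdict

ALL = "*"  # hypothetical wildcard meaning "every symbol"


class StreamingNetwork:
    """Toy publish/subscribe network with per-symbol subscriptions."""

    def __init__(self):
        self._authorised = set()
        self._subs = defaultdict(list)  # symbol -> list of subscriber inboxes

    def authorise(self, party):
        self._authorised.add(party)

    def subscribe(self, party, symbols, inbox):
        # Only authorised parties may subscribe.
        if party not in self._authorised:
            raise PermissionError(f"{party} is not authorised")
        for symbol in symbols:
            self._subs[symbol].append(inbox)

    def publish(self, tick):
        # Deliver to subscribers of this symbol and to "everything" subscribers.
        targets = self._subs.get(tick["symbol"], []) + self._subs.get(ALL, [])
        for inbox in targets:
            inbox.append(tick)


network = StreamingNetwork()
london, new_york, singapore = [], [], []
for party in ("london_trader", "ny_broker", "sgx"):
    network.authorise(party)

network.subscribe("london_trader", ["TSLA"], london)
network.subscribe("ny_broker", ["TSLA", "AAPL"], new_york)
network.subscribe("sgx", [ALL], singapore)

# The exchange publishes everything; prices here are invented.
for symbol, price in [("TSLA", 250.0), ("AAPL", 190.0), ("GOOG", 140.0)]:
    network.publish({"symbol": symbol, "price": price})
```

After the three ticks, the London inbox holds only the Tesla tick, New York holds Tesla and Apple, and Singapore holds all three. The Swiss bank's "last 2 hours" request would need a time-windowed store on top of this, which the sketch leaves out.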

So what is the job of a data streaming network?

- Real-time (low latency) performance: Instant updates of the data wherever the subscriber is located and whatever the network bandwidth is

- Uninterrupted experience: Must detect drop-offs, redeliver messages, and process missed messages in a completely seamless manner

- Multi-Protocol support: Understand and distribute multiple protocols

- Security: Control who can send or receive data while integrating standard encryption such as TLS and AES

- Global Distribution: International points of presence
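The "uninterrupted experience" point is the easiest one to sketch. One common approach, which I am assuming here rather than describing any particular product, is to give every message a sequence number: the subscriber notices a gap and asks the broker to replay what it missed. `ReplayBroker` and `Subscriber` are hypothetical names.

```python
class ReplayBroker:
    """Toy broker that keeps every message so gaps can be replayed."""

    def __init__(self):
        self._log = []  # message with sequence number n is stored at index n - 1

    def publish(self, payload):
        self._log.append(payload)
        return len(self._log)  # sequence number of this message

    def replay_after(self, last_seen):
        # Return (sequence number, payload) for everything after last_seen.
        return list(enumerate(self._log[last_seen:], start=last_seen + 1))


class Subscriber:
    def __init__(self):
        self.last_seen = 0
        self.received = []

    def on_message(self, seq, payload, broker):
        if seq <= self.last_seen:
            return  # duplicate, already processed
        if seq > self.last_seen + 1:
            # Gap detected: fetch everything missed, including this message.
            batch = broker.replay_after(self.last_seen)
        else:
            batch = [(seq, payload)]
        for s, p in batch:
            self.received.append(p)
            self.last_seen = s


broker = ReplayBroker()
sub = Subscriber()

sub.on_message(broker.publish("m1"), "m1", broker)  # delivered normally
broker.publish("m2")                                # lost on the way
broker.publish("m3")                                # lost on the way
sub.on_message(broker.publish("m4"), "m4", broker)  # gap detected, replay
```

The subscriber ends up with `m1` to `m4` in order even though two deliveries were dropped. A real network would also have to bound how long the broker keeps old messages.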

But no single third-party or open-source tool builds an entire data streaming network. Engineers often choose open-source tools like Kafka and then experiment with costly add-ons; for example, every protocol needs its own connector to communicate with Kafka.

Global distribution is a significant bottleneck in such open-source tools. And even with these tools, it is hard to reach ideal real-time performance as data volumes and real-time requirements grow.

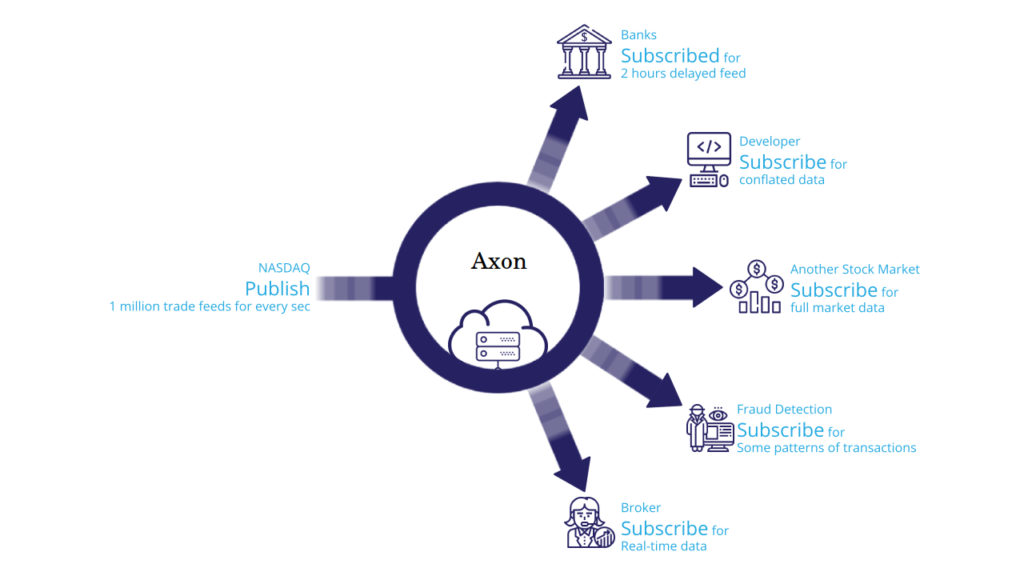

So are there any tools that meet the requirements of a data streaming network?

Cerexio’s Axon message broker platform provides all of these data streaming capabilities in one place. Technically, it uses unique adaptive data compression and adaptive data distribution to deliver them in a way that did not exist before.

Another Big Question!

A data streaming network is built on a data streaming platform. It is a network for streaming data from one location to another.

We all know that a NYC to Singapore flight with a transit in Dubai is sometimes much cheaper than a direct flight. Similarly, getting data from a NYC data centre to a Singapore data centre via a London data centre may be faster than a direct transfer, because of network latencies.
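The flight analogy maps onto a shortest-path search where the "price" of each hop is its latency. Below is a small sketch using Dijkstra's algorithm. The latency numbers are invented purely to show a case where the relay wins; they are not measurements, and `fastest_route` is a hypothetical helper.

```python
import heapq


def fastest_route(latency_ms, source, target):
    """Dijkstra's algorithm: return (total latency, path) or None."""
    best = {source: 0.0}
    previous = {}
    queue = [(0.0, source)]
    while queue:
        cost, node = heapq.heappop(queue)
        if node == target:
            break
        if cost > best.get(node, float("inf")):
            continue  # stale queue entry
        for neighbour, hop in latency_ms.get(node, {}).items():
            new_cost = cost + hop
            if new_cost < best.get(neighbour, float("inf")):
                best[neighbour] = new_cost
                previous[neighbour] = node
                heapq.heappush(queue, (new_cost, neighbour))
    if target not in best:
        return None
    # Walk back from the target to rebuild the path.
    path = [target]
    while path[-1] != source:
        path.append(previous[path[-1]])
    return best[target], path[::-1]


# Made-up one-way latencies in milliseconds between data centres.
links = {
    "NYC": {"SIN": 260, "LON": 70},
    "LON": {"NYC": 70, "SIN": 170},
    "SIN": {"NYC": 260, "LON": 170},
}

route = fastest_route(links, "NYC", "SIN")
print(route)  # (240.0, ['NYC', 'LON', 'SIN'])
```

With these numbers, going through London takes 240 ms against 260 ms for the direct link, so the relay route is chosen.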

Since we are promising low-latency, real-time delivery, the data streaming network has to ensure the fastest possible delivery of the data. How does it do that?

To answer that, we need to go to the second layer of the data streaming network.

To be continued!