Why will data streaming be the backbone of the robotics industry?

In 2019, MIT's Biomimetic Robotics Lab released a video of nine of its Cheetah robots.

“To stay balanced, the robots make about 30 decisions per second.” That is how they stay stable while moving through their environment.

Thirty decisions per second is far higher than what commercial robots achieve; they usually make only one or two decisions per second. Yet we could raise these numbers from 2 to 20 in commercial robots, or from 30 to 100 in research robots. How can we do that?

This article explains the details in a simplified way, so that even readers outside robotics can follow along.

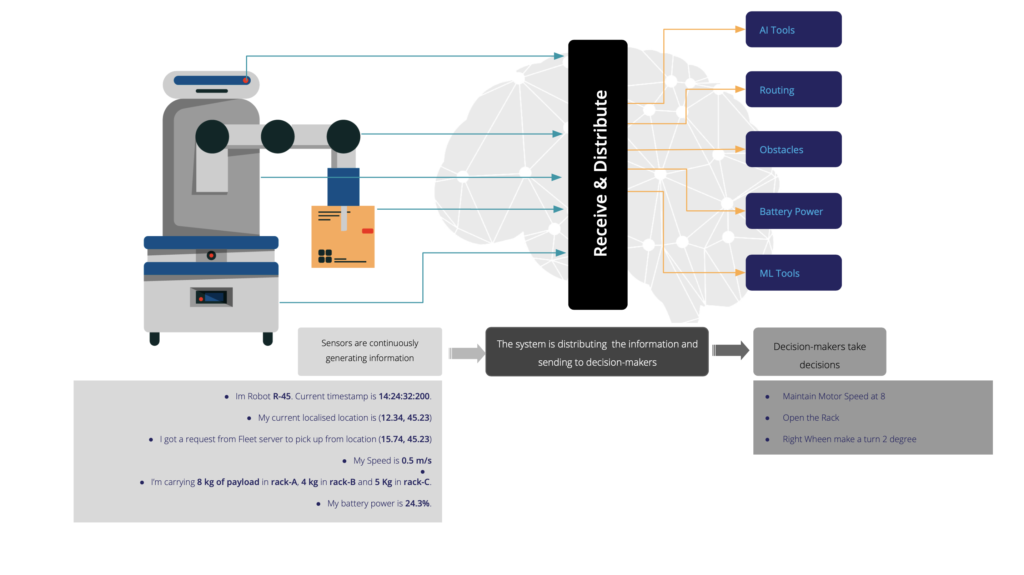

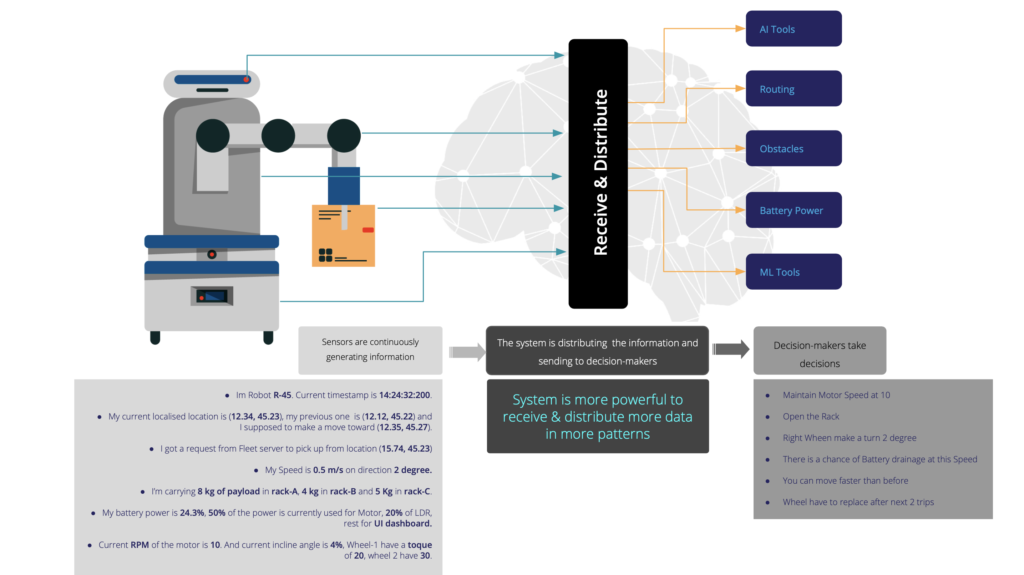

Robots have numerous sensors that produce data and sophisticated programs that make decisions, such as:

- AI models to understand the environment

- Routing algorithms to navigate the robot

- Obstacle detection to feed data to the routing algorithm

- And many more

Decision-makers control every movement of each actuator. If the system detects an obstacle while the robot is moving in some direction, the robot has to change its behaviour: the decision-maker instructs the robot to stop or turn.
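The sense-decide-act cycle described above can be sketched as a minimal loop. This is only an illustration, assuming a hypothetical `Reading` format from an obstacle detector and a hypothetical 0.5 m stopping distance; real robots run this kind of logic inside a control framework, not over a plain Python list. Note that each incoming reading produces exactly one decision.

```python
from dataclasses import dataclass
from enum import Enum


class Command(Enum):
    FORWARD = "forward"
    TURN = "turn"
    STOP = "stop"


@dataclass
class Reading:
    """One obstacle-detection reading (hypothetical format, for illustration)."""
    distance_m: float  # distance to the nearest obstacle straight ahead
    side_clear: bool   # whether there is room to turn away


def decide(reading: Reading, stop_distance_m: float = 0.5) -> Command:
    """Map the latest reading to an actuator command."""
    if reading.distance_m > stop_distance_m:
        return Command.FORWARD  # path is clear: keep moving
    if reading.side_clear:
        return Command.TURN     # obstacle ahead, but we can steer around it
    return Command.STOP         # obstacle ahead and no room to turn


def run(readings: list[Reading]) -> list[Command]:
    """Make one decision per incoming reading."""
    return [decide(r) for r in readings]


if __name__ == "__main__":
    stream = [Reading(2.0, True), Reading(0.4, True), Reading(0.3, False)]
    for cmd in run(stream):
        print(cmd.value)
```

Because the loop decides once per reading, the decision rate can never exceed the rate at which readings arrive.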

If we provide less information, the robot makes fewer decisions; if we provide more, it makes more. So precision comes down to how you supply data to the decision-making programs.

So why can’t we make robots more precise, when the sensors already produce enough data and there are enough decision-making models and programs?

The main reason is the bottleneck in transmitting data between the sensors and the decision-makers.

When we increase the number of sensors and actuators, or the number of robots, the amount of data grows drastically. That data is high-throughput, high-volume, and often in different formats. For example, a proximity sensor, a network camera, and a laser sensor each produce different data patterns.

Current technologies and mechanisms cannot handle this much information and transport it, as is, to the decision-makers at scale. So systems compromise on the quantity of information, for example by reducing throughput and reducing the number of readings they send.
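To see why systems end up cutting throughput, it helps to do the arithmetic. The sketch below assumes every reading is sent uncompressed over one shared link and that all sensors share it equally; the robot count, sensor count, message size, and link capacity in the example are hypothetical numbers, not measurements.

```python
def required_bps(robots: int, sensors_per_robot: int, rate_hz: float, msg_bytes: int) -> float:
    """Total bits per second needed to ship every reading to the decision-makers."""
    return robots * sensors_per_robot * rate_hz * msg_bytes * 8


def max_rate_hz(link_bps: float, robots: int, sensors_per_robot: int, msg_bytes: int) -> float:
    """Highest per-sensor rate the link can carry if all sensors share it equally."""
    return link_bps / (robots * sensors_per_robot * msg_bytes * 8)


if __name__ == "__main__":
    # Hypothetical fleet: 10 robots, 20 sensors each, 1,000-byte messages at 100 Hz.
    need = required_bps(10, 20, 100, 1000)
    print(f"needed: {need / 1e6:.0f} Mbps")
    # On a hypothetical 100 Mbps link, each sensor must drop to a lower rate.
    print(f"max per-sensor rate: {max_rate_hz(100e6, 10, 20, 1000):.1f} Hz")
```

In this example the fleet would need 160 Mbps, so on a 100 Mbps link each sensor has to fall back to 62.5 Hz, which directly lowers how often the decision-makers receive fresh data.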

If we can bring more data to the decision-makers in real time, we can solve many problems, such as:

- Make robots more precise in their operations

- Predict faults in real time and take precautions

- Get maximum utilisation from robots

- Add more intelligence to the robot system

- Make maintenance much easier

- And more

As we discussed, transporting high-throughput data in real time is not easy, because the data comes in multiple formats (multiple protocols): different decision-makers and sensors send or accept different data formats.

A highly extensible data broker is needed to handle all of these issues.
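The idea of one broker that accepts many formats can be illustrated with a toy, in-process publish/subscribe broker. This is not Cerexio's platform or API; it is a hypothetical sketch in which each sensor format gets its own adapter that converts raw bytes into a common `Message` envelope before the broker fans it out to subscribers. The format names (`text`, `json`) and the payloads are assumptions chosen for illustration.

```python
import json
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class Message:
    """Common envelope that every sensor format is converted into."""
    topic: str
    payload: dict[str, Any]


Adapter = Callable[[bytes], dict[str, Any]]
Handler = Callable[[Message], None]


@dataclass
class Broker:
    """Toy in-process broker: per-format adapters in, topic subscribers out."""
    adapters: dict[str, Adapter] = field(default_factory=dict)
    subscribers: dict[str, list[Handler]] = field(default_factory=lambda: defaultdict(list))

    def register_format(self, fmt: str, adapter: Adapter) -> None:
        self.adapters[fmt] = adapter

    def subscribe(self, topic: str, handler: Handler) -> None:
        self.subscribers[topic].append(handler)

    def publish(self, topic: str, fmt: str, raw: bytes) -> None:
        # Decode the sensor's native format once, then deliver to every subscriber.
        msg = Message(topic, self.adapters[fmt](raw))
        for handler in self.subscribers[topic]:
            handler(msg)


def proximity_adapter(raw: bytes) -> dict[str, Any]:
    # Hypothetical plain-text proximity format, e.g. b"dist=0.42"
    key, value = raw.decode().split("=")
    return {key: float(value)}


def laser_adapter(raw: bytes) -> dict[str, Any]:
    # Hypothetical JSON laser format, e.g. b'{"ranges": [1.2, 0.9]}'
    return json.loads(raw)


if __name__ == "__main__":
    broker = Broker()
    broker.register_format("text", proximity_adapter)
    broker.register_format("json", laser_adapter)
    received: list[Message] = []
    broker.subscribe("obstacles", received.append)
    broker.publish("obstacles", "text", b"dist=0.42")
    broker.publish("obstacles", "json", b'{"ranges": [1.2, 0.9]}')
    print(received)
```

The design choice that matters here is that subscribers only ever see the common envelope, so adding a new sensor type means registering one more adapter rather than changing every decision-maker. A production broker would also need networking, buffering, and back-pressure.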

Check this out.

www.cerexio.com

Cerexio offers a message broker (streaming) platform for streaming large volumes of data in real time. It delivers your data faster than you thought possible, regardless of throughput, data patterns, and more.

For example, suppose you want to stream the live network camera feed from your robot (running ROS, the Robot Operating System) to a server in a remote location. You will face several limitations: network bandwidth, frame rate, video quality, video protocol, and network latency.

A few open-source and third-party solutions are available for video streaming, but with them you can only achieve a maximum of 8 fps at 1 Mbps of bandwidth, and the minimum delay is around 5 seconds.

Cerexio’s axon stream, by contrast, provides 24 fps at 1 Mbps of bandwidth, with a latency of only 800 milliseconds.
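The figures above imply a per-frame bit budget: at a fixed 1 Mbps, going from 8 fps to 24 fps leaves each frame about a third of the bits, so the encoder must fit every frame into a much smaller size. The sketch below only does that division; it assumes a constant bitrate and ignores protocol and container overhead, so treat the results as rough averages rather than properties of any particular product.

```python
def bits_per_frame(bandwidth_bps: float, fps: float) -> float:
    """Average bit budget per frame at a constant bitrate (overhead ignored)."""
    return bandwidth_bps / fps


if __name__ == "__main__":
    for fps in (8, 24):
        budget = bits_per_frame(1_000_000, fps)
        print(f"{fps} fps: {budget:,.0f} bits, about {budget / 8 / 1000:.1f} kB per frame")
```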