What does it mean to allow Data Standardisation in the new age?

Data standardisation is a result-oriented data management strategy that brings disparate datasets into a single, easily accessible format so that multiple users can work on actionable data collaboratively. As the name suggests, it transforms diverse data flows into a ‘standard format’ before loading them into the target systems. It is like translating words from different languages into a universal language that everybody understands. With industries racing towards digital transformation, data standardisation has been redefined from a ‘value-adding feature’ to an ‘essential capability’. With the explosion of business transitions driven by the Industrial Internet of Things (IIoT), industrial IT systems are now expected to support data standardisation. Adopting software quickly became compulsory once Industry 4.0 reshaped industries; in the new age, adopting cyber-physical systems (CPS) is increasingly seen as mandatory. CPSs have blurred the traditional boundaries between industries and opened the way to open markets, extensive ecosystems and omnichannel models, all of which generate an abundance of data. This is why data standardisation must be allowed in the new age.

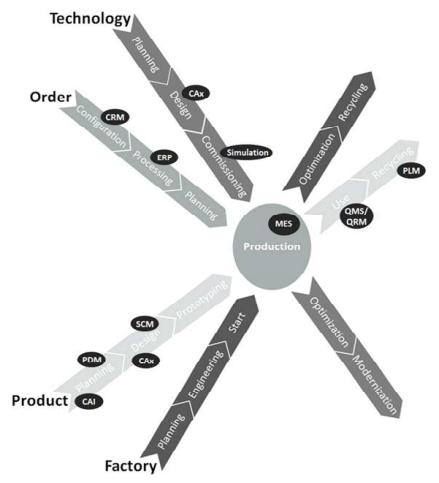

To elaborate further on data standardisation, let us take a contemporary, hypothetical example. Imagine your company undertakes digital transformation by upgrading its traditional physical systems to hybrid systems. For instance, you are transforming them into the extended manufacturing network illustrated in the accompanying diagram.

As you can see, your IT systems will be extremely complicated, and actions and operations at every level will affect your company’s productivity. Your data systems will be overloaded with data originating from complex modular systems and modern technologies such as Edge Computing, Predictive Analytics, AI, Data Science, Blockchain Technology, Robotics and VR, and the list goes on. Therefore, as vitally important as this upgrade is, you will also have to be well equipped to manage data generated across many different landscapes.

You will ask yourself, ‘How can I process my volume of data if it comes in different types and flows in from many distinct systems?’ This is where you will see why data standardisation is indispensable. Through data standardisation you can unlock your full innovative potential by uniformly extracting data, transforming it into a standard format and routing it to target users in the most efficient manner, while enabling relevant, healthy synergies within your ecosystem.
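The extract, transform and route idea described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a reference implementation: the two source formats (a CSV line from a legacy controller reporting Fahrenheit, and a JSON payload from a sensor reporting Celsius), the field names and the `StandardReading` class are all hypothetical.

```python
import json
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class StandardReading:
    """Hypothetical common format shared by every downstream consumer."""
    machine_id: str
    temperature_c: float
    recorded_at: str  # ISO 8601 timestamp, always in UTC


def from_plc_csv(line: str) -> StandardReading:
    """Assumed source A: 'machine,epoch_seconds,temp_fahrenheit' as one CSV line."""
    machine, epoch, temp_f = line.strip().split(",")
    return StandardReading(
        machine_id=machine.strip().upper(),
        temperature_c=round((float(temp_f) - 32) * 5 / 9, 2),
        recorded_at=datetime.fromtimestamp(int(epoch), tz=timezone.utc).isoformat(),
    )


def from_sensor_json(payload: str) -> StandardReading:
    """Assumed source B: JSON with Celsius and an ISO timestamp carrying a UTC offset."""
    data = json.loads(payload)
    utc_time = datetime.fromisoformat(data["ts"]).astimezone(timezone.utc)
    return StandardReading(
        machine_id=str(data["deviceId"]).upper(),
        temperature_c=round(float(data["tempC"]), 2),
        recorded_at=utc_time.isoformat(),
    )


# Two differently shaped inputs end up in one uniform list of records.
readings = [
    from_plc_csv("press-01,1700000000,212.0"),
    from_sensor_json(
        '{"deviceId": "oven-07", "tempC": 180.5, "ts": "2023-11-15T06:13:20+08:00"}'
    ),
]

for reading in readings:
    print(reading)
```

Once every source has its own small adapter, downstream users only ever deal with one record shape, one unit and one time zone, whatever the original system emitted.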

Benefits of Data Standardisation in the Industrial Realm

Your company, like all companies, is forced to adapt constantly. These disruptions cause you to rely on multiple technologies and solutions, all interconnected via a network of data streams. Data standardisation is a transformative data strategy that pulls data from all these systems and transforms it into a single normalised format, streamlining data processing, actioning, analysis and sharing in a timely and efficient manner; this is why you should consider adding this capability to your data network. Manufacturers and engineers use smart technological solutions to give IT systems the ability to monitor, secure and automate end-to-end production chains, and data standardisation validates the trust they place in technology. It does so by improving agility, openness and productivity, allowing modern solutions to configure themselves automatically through safe integration while remaining compliant with business and legal standards.

Utilising data standardisation increases the accuracy of your business data models by giving them the ability to rescale various data types to a commonly actionable version. Using data models backed by reliable data transformation algorithms and lag-free features to enable data standardisation has many benefits, such as:

- Ensures optimal downstream data flows

- Helps identify the relevance and authenticity of data

- Lets you spend your time using data instead of doubting it

- Reduces the supervision needed for data oversight and resource allocation

- Streamlines the transition towards sector-based platforms

- Is a prerequisite for vertical and horizontal system integration

- Eliminates redundant ‘fluff’ data

- Improves data traceability

- Reduces the time taken to bring data into compliance with submission and regulatory standards, expediting the creation of analysis parameters

- Strengthens the statistical and analytical capabilities of the organisation

- Minimises data study build-up time through automated Electronic Data Capture (EDC) features

- Ensures consistency in end-to-end data processes through uniform format transition

- Enables reusable data mapping tools, since the complexity of the data is reduced

- Aggregates data, since disaggregated datasets are not helpful in measuring progress and limitations in data management

If you do not fully understand the benefits of data standardisation and optimal data management, you will overlook your company’s substandard performance. Some of the limitations of your ecosystem that may go unnoticed if your software stack is not upgraded with an Industry 4.0 data-standardising solution are:

- Inefficiency and latency in handling multiple data streams originating from multiple protocols

- Unnoticed mismanagement of leads when routing, segmenting and scoring

- Manual data handling, which is immensely detrimental because it forfeits the advantages of automated pipeline handling

How can your company achieve industry 4.0-qualified Data Standardisation?

Companies must employ well-designed, advanced message queuing protocols to enable data standardisation through message-oriented middleware. Software vendors create integration brokers, which are intermediary data transformation modules, to standardise every data stream into a formally defined format via formal messaging protocols. An integration broker mediates between clusters by validating, routing and transforming data, which decouples the systems involved and supports decentralised data ownership. It provides data standardisation by extracting large data sets and transforming them into a common alternative representation, while simplifying operations across your corporate consortia.
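To make the validate, transform and route responsibilities of an integration broker more concrete, here is a toy in-memory sketch in Python. It is an assumption-laden illustration rather than how any real middleware or vendor product works: the `IntegrationBroker` class, the message envelope fields (`source`, `topic`, `payload`) and the two example sources are all hypothetical, and a production broker would add persistence, network protocols and error handling.

```python
from typing import Any, Callable, Dict, List

Message = Dict[str, Any]
Transformer = Callable[[Any], Dict[str, Any]]
Handler = Callable[[Message], None]


class IntegrationBroker:
    """Toy broker: validates each message, standardises it, then routes it."""

    REQUIRED_FIELDS = ("source", "topic", "payload")  # assumed envelope shape

    def __init__(self) -> None:
        self._transformers: Dict[str, Transformer] = {}
        self._subscribers: Dict[str, List[Handler]] = {}
        self.rejected: List[Message] = []

    def register_transformer(self, source: str, transformer: Transformer) -> None:
        """Declare how payloads from one source map to the standard format."""
        self._transformers[source] = transformer

    def subscribe(self, topic: str, handler: Handler) -> None:
        """Register a consumer; it never needs to know who produced the data."""
        self._subscribers.setdefault(topic, []).append(handler)

    def publish(self, message: Message) -> bool:
        # 1. Validate: reject malformed envelopes and unknown sources.
        if any(field not in message for field in self.REQUIRED_FIELDS):
            self.rejected.append(message)
            return False
        transformer = self._transformers.get(message["source"])
        if transformer is None:
            self.rejected.append(message)
            return False
        # 2. Transform: convert the source-specific payload to the standard form.
        envelope = {
            "topic": message["topic"],
            "source": message["source"],
            "data": transformer(message["payload"]),
        }
        # 3. Route: deliver the standardised envelope to every topic subscriber.
        for handler in self._subscribers.get(message["topic"], []):
            handler(envelope)
        return True


broker = IntegrationBroker()
broker.register_transformer(
    "legacy_plc",
    lambda p: {"machine_id": p["id"].upper(),
               "temperature_c": round((p["temp_f"] - 32) * 5 / 9, 2)},
)
broker.register_transformer(
    "iot_sensor",
    lambda p: {"machine_id": p["deviceId"].upper(), "temperature_c": p["tempC"]},
)

received: List[Message] = []
broker.subscribe("temperature", received.append)

broker.publish({"source": "legacy_plc", "topic": "temperature",
                "payload": {"id": "press-01", "temp_f": 212.0}})
broker.publish({"source": "iot_sensor", "topic": "temperature",
                "payload": {"deviceId": "oven-07", "tempC": 180.5}})
broker.publish({"source": "unknown", "topic": "temperature", "payload": {}})

print(len(received), "delivered;", len(broker.rejected), "rejected")
```

Producers and consumers only share the broker and the standard format, which is the decoupling the paragraph above refers to: adding a new source means registering one more transformer, without touching any subscriber.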

To make things even easier, Cerexio, a technology solution provider in Singapore, has introduced an advanced, up-to-date message broker to the market. This proprietary solution, the Cerexio Axon Multi-protocol Message Broker, is a reactive data pipeline solution built on a scalable, unified Big Data Management Platform. Your clustered data environments can use it to move data swiftly to a shared platform and carry out data standardisation in a timely manner. Cerexio Axon Multi-protocol Message Broker implements multiple protocol drivers for industrial machines, applications and software systems to enable data standardisation. It serialises the data from different endpoints and uses advanced data storage technology to store it in a distributed management environment.

What can your organisation achieve by adopting Cerexio Axon Multi-protocol Message Broker?

Data standardisation using the aforementioned Cerexio solution will allow your data scientists and data analytics team to easily build transformative, operational models with excellent visualisation capabilities. Industrial giants typically adopt highly modular IT systems developed by a multitude of vendors, which produces a wide assortment of datasets. With this message broker, these mass data streams can be handled smoothly and in synergy with open protocols. Cerexio Axon Multi-protocol Message Broker can optimise the use of Artificial Intelligence, Machine Learning and Natural Language Processing capabilities, allowing your BI systems to get more out of data in less time.

This solution allows persistent and uninterrupted data flow between your clusters, since it has been upgraded with smart data streaming technologies. Enterprise-level delivery of multiple data sets and conflated data dissemination become much easier with Cerexio Axon Multi-protocol Message Broker. It is designed to ensure 100% persistence of data flows in real time and is versatile enough to supercharge domains such as Finance, Manufacturing, Research and Development, Logistics and Supply Chain, Telecom and more. The solution is technology-agnostic and remains compatible regardless of the volume of your data traffic. It can be introduced with little effort as a one-size-fits-all data standardising tool for handling industrial data in the digital age. It also allows you to take confident steps towards digital transformation.